Introduction to GPU Compute Pipelines

You may have heard about compute pipelines as an important concept in handling complex computational tasks. But what exactly are compute pipelines?

At their core, these are systems designed to process data through a series of steps or stages, each specialized for a specific function. This approach not only streamlines processing but also enhances efficiency and scalability. As the demand for faster and more efficient data processing grows, understanding the role and mechanics of compute pipelines becomes essential. They’re particularly crucial in environments where large-scale data handling and real-time processing are commonplace, such as big data analytics, machine learning projects and high-performance computing.

Before we explain the QtQuickComputeItem, we’ll dive into the world of compute pipelines, unraveling their necessity, structure and applications.

The Need for Compute Pipelines

In today’s technological realm, data processing tasks are growing in complexity and volume, even on embedded devices. Traditionally, these tasks are handled on the CPU, which often falls short in terms of efficiency, speed and power consumption. Modern embedded devices, on the other hand, often include powerful GPUs that remain underutilized. This is where compute pipelines come into play, offering a structured way to run demanding computations efficiently on the GPU.

Streamlining Complex Tasks: The essence of a compute pipeline is its ability to break down complex processes into manageable stages. Each stage in the pipeline is designed to perform a specific task or set of tasks, allowing for more focused and efficient processing. For instance, in a machine learning workflow, different stages may include data collection, preprocessing, model training and prediction.

Enhancing Efficiency and Productivity: By segmenting tasks, compute pipelines enable parallel processing. This not only speeds up the overall process but also improves resource utilization. In scenarios like video processing, scientific simulations or the processing of large datasets, the ability to process multiple data streams simultaneously is invaluable.

Dealing with Scalability and Flexibility: Another significant aspect of compute pipelines is their scalability. As the volume of data or the complexity of tasks increases, pipelines can be scaled to meet these demands without a complete overhaul of the system. This adaptability makes them ideal for businesses and research environments where data requirements can change rapidly.

Through these capabilities, compute pipelines address the core challenges of modern computing environments. They not only offer a more efficient way to process data but also change how complex computational tasks are structured: as a chain of well-defined, independently scalable stages.
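To make the staged structure concrete, here is a minimal CPU-side sketch in JavaScript (the language used later in this post for buffer initialization). It models a pipeline as an ordered list of stage functions, each consuming the previous stage’s output. The stage names and the runPipeline helper are hypothetical and only illustrate the idea; on a GPU, a stage would instead be a shader dispatch.

```javascript
// A pipeline is modeled as an ordered list of stage functions; each stage
// receives the output of the previous one. Stage names are hypothetical.
function runPipeline(stages, input) {
  return stages.reduce((data, stage) => stage(data), input);
}

// Stage 1: drop values that cannot be processed (e.g. NaN from a faulty sensor).
const dropInvalid = (values) => values.filter((v) => Number.isFinite(v));

// Stage 2: scale values into the range [-1, 1].
const normalize = (values) => {
  const max = Math.max(0, ...values.map(Math.abs));
  return max === 0 ? values : values.map((v) => v / max);
};

// Stage 3: a stand-in for the actual computation.
const square = (values) => values.map((v) => v * v);

const result = runPipeline([dropInvalid, normalize, square], [2, NaN, -4, 1]);
console.log(result); // [ 0.25, 1, 0.0625 ]
```

Because each stage only depends on its input, stages can be swapped, reordered or tested in isolation, which is the modularity the section describes.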

Components and Structure of Compute Pipelines

Understanding the components and structure of compute pipelines is crucial for appreciating their functionality and efficiency. Typically, a compute pipeline consists of various interconnected components, each playing a distinct role in the data processing journey.

Key Components:

- Data Source: The beginning of any compute pipeline. This could be databases, live data feeds, file systems, or even real-time user input.

- Processing Stages: These are the heart of the pipeline. Each stage is responsible for a specific type of processing, like data cleansing, transformation, analysis, or complex algorithms. The stages are often modular, allowing for easy updates or changes as needed.

- Data Storage: Temporary or permanent storage components hold data between stages or after the final output. This can range from in-memory storage for fast access to more durable solutions like databases or cloud storage.

- Management and Orchestration Tools: Essential for coordinating the various stages, especially in pipelines with numerous or complex processes. These tools ensure data flows smoothly from one stage to another and manage resources effectively.

Structural Aspects:

- Sequential vs. Parallel Processing: While some pipelines process data sequentially (one stage after another), others operate in parallel, processing multiple data streams simultaneously.

- Scalability: Pipelines are designed to scale up or down, depending on the workload. This flexibility is crucial for adapting to varying data volumes or processing needs.

- Error Handling and Recovery: Robust compute pipelines include mechanisms to handle failures or errors at any stage, ensuring data integrity and continuous operation.
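The error-handling aspect can be sketched in the same style. In this minimal example, each stage carries a name so a failure can be attributed to it, and an optional fallback lets the pipeline recover instead of aborting. The `{ name, run, fallback }` shape and the runStages helper are hypothetical conventions chosen for illustration, not part of any library.

```javascript
// A minimal sketch of per-stage error handling with optional recovery.
// The { name, run, fallback } stage shape is a hypothetical convention.
function runStages(stages, input) {
  let data = input;
  const errors = [];
  for (const { name, run, fallback } of stages) {
    try {
      data = run(data);
    } catch (err) {
      errors.push(`${name}: ${err.message}`);
      if (fallback === undefined) {
        return { ok: false, data, errors }; // no recovery possible: stop here
      }
      data = fallback(data); // recover and continue with the next stage
    }
  }
  return { ok: true, data, errors };
}

const stages = [
  { name: "parse", run: (s) => JSON.parse(s), fallback: () => [] },
  { name: "sum", run: (xs) => xs.reduce((a, b) => a + b, 0) },
];

console.log(runStages(stages, "[1, 2, 3]")); // { ok: true, data: 6, errors: [] }
console.log(runStages(stages, "not json").data); // 0 (parse failed, fallback used)
```

Collecting the errors instead of discarding them keeps the failure visible for monitoring while the pipeline keeps running.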

By combining these components and structural considerations, compute pipelines offer a versatile and robust framework for handling diverse data processing tasks. From simple data transformation to complex analytical processes, the architecture of compute pipelines makes them indispensable tools in today’s data-driven world.

Use Cases and Advantages of Compute Pipelines

Compute pipelines have a wide array of applications across various industries and fields. Understanding these use cases, along with their inherent advantages, provides insight into why they are so integral in modern computing.

Diverse Applications:

- Big Data Analytics: Pipelines are fundamental in managing and processing large datasets for insights, particularly in fields like marketing, finance and healthcare.

- Machine Learning Workflows: From data preparation to training and inference, compute pipelines streamline every stage of the machine learning lifecycle.

- Media Processing: In the media industry, pipelines are used for tasks such as video encoding, rendering and complex visual effects.

Key Advantages:

- Efficiency and Speed: By allowing for parallel processing and reducing redundant operations, compute pipelines significantly speed up data processing tasks.

- Scalability: Whether it’s handling a surge in data or expanding the scope of processing, pipelines can adapt flexibly, making them ideal for growth and change.

- Error Handling and Reliability: Well-designed pipelines include mechanisms for error detection and recovery, ensuring consistent and reliable data processing.

- Enhanced Collaboration and Productivity: Pipelines often include tools for monitoring and collaboration, which enhances team productivity and facilitates better project management.

Through these use cases and benefits, compute pipelines simplify complex processes and can pave the way for further innovation and enhanced performance in a wide array of computing tasks.

Conclusion on GPU Compute Pipelines

As we’ve seen throughout this first overview, compute pipelines are more than just a technical convenience; they can be a fundamental component of the modern computing landscape. Their ability to process and manage data efficiently makes them important in a world where the volume, velocity and variety of data are constantly increasing. On embedded devices, they can help to utilize otherwise idle system resources such as the GPU, reduce CPU load and improve performance.

So, let us ask ourselves: wouldn’t it be nice to use them in Qt Quick? Wouldn’t it be nice to use them as easily as a fragment or vertex shader?

The birth of QtQuickComputeItem

For a long time, Qt Quick has provided shader integration: you can write fragment and vertex shaders and load them into your application with a Qt Quick ShaderEffect item (https://doc.qt.io/qt-6/qml-qtquick-shadereffect.html). QML properties are seamlessly mapped to uniform inputs in the shader code. This is often used to apply graphical effects on top of UI components.

Our QtQuickComputeItem provides a cross-platform interface for running a compute pipeline in a Qt Quick application, just as easily as you can integrate a fragment or vertex shader. The QtQuickComputeItem is open source and available on our GitHub. It comes with an example that we want to discuss here. Just head over to GitHub to get started and try it out.

You can find a comprehensive introduction to compute shaders in the Khronos wiki (https://www.khronos.org/opengl/wiki/Compute_Shader), from which we would like to quote the following paragraph:

“The [compute] ‘space’ that a compute shader operates on is largely abstract; it is up to each compute shader to decide what the space means. The number of compute shader executions is defined by the function used to execute the compute operation. Most important of all, compute shaders have no user-defined inputs and no outputs at all. The built-in inputs only define where in the ‘space’ of execution a particular compute shader invocation is.

Therefore, if a compute shader wants to take some values as input, it is up to the shader itself to fetch that data, via texture access, arbitrary image load, shader storage blocks, or other forms of interface. Similarly, if a compute shader is to actually compute anything, it must explicitly write to an image or shader storage block.”

Installation of the QtQuickComputeItem

Getting started is easy: clone the GitHub repository, build the plugin and set the QML_IMPORT_PATH environment variable to the plugin’s import path:

git clone git@github.com:basysKom/qtquickcomputeitem.git
mkdir build_qtquickcomputeitem
cd build_qtquickcomputeitem
cmake ../qtquickcomputeitem
make
export QML_IMPORT_PATH=$(pwd)/lib/

Run one of the examples:

cd examples/basic_storage
./qmlbasicstorage

This example renders an animated particle starfield. The starfield example is used throughout this blog post to demonstrate how to use the QtQuickComputeItem.

An overview

The QtQuickComputeItem supports both storage buffers and images as inputs and outputs.

In compute shaders, the work is partitioned into three-dimensional work groups, each with a size in the x, y and z directions. These local group sizes are defined in the shader code, while the actual number of groups dispatched in each direction (x, y and z) is configured through QML properties. Before exploring the QML side, let’s look at the shader:

#version 440

layout (local_size_x = 256) in;

struct Particle
{
    vec4 pos;
    vec4 color;
};

layout(std140, binding = 0) buffer StorageBuffer
{
    Particle particle[];
} buf;

layout(std140, binding = 1) uniform UniformBuffer
{
    float speed;
    uint dataCount;
} ubuf;

void main()
{
    uint index = gl_GlobalInvocationID.x;
    if (index < ubuf.dataCount) {
        vec4 position = buf.particle[index].pos;
        position.x += ubuf.speed;
        if (position.x > 1.0) {
            position.x -= 2.0;
        }
        // write back the new position and derive a grey-scale color from the depth
        buf.particle[index].pos = position;
        buf.particle[index].color = vec4(position.z, position.z, position.z, 1.0);
    }
}

Here the local group size is 256 in the x direction and 1 in the y and z directions. A single storage buffer, bound at binding point 0, serves as both input and output; the uniform buffer at binding point 1 holds the speed and dataCount parameters.
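The guard `if (index < ubuf.dataCount)` matters because invocations are always launched in whole work groups. The following JavaScript sketch is a CPU simulation, not part of the plugin: it enumerates the global invocation IDs of a one-dimensional dispatch using the GLSL relation gl_GlobalInvocationID.x = gl_WorkGroupID.x * gl_WorkGroupSize.x + gl_LocalInvocationID.x, and shows that surplus invocations in the last group are masked out by the same check. The simulateDispatch name is hypothetical.

```javascript
// CPU simulation of how a 1D dispatch enumerates shader invocations.
const LOCAL_SIZE_X = 256; // must match layout(local_size_x = 256) in the shader

function simulateDispatch(dispatchX, dataCount) {
  const touched = [];
  for (let groupId = 0; groupId < dispatchX; ++groupId) {       // gl_WorkGroupID.x
    for (let localId = 0; localId < LOCAL_SIZE_X; ++localId) {  // gl_LocalInvocationID.x
      const index = groupId * LOCAL_SIZE_X + localId;           // gl_GlobalInvocationID.x
      if (index < dataCount) {
        touched.push(index); // this invocation updates particle[index]
      }
      // invocations with index >= dataCount do nothing, like the shader's guard
    }
  }
  return touched;
}

const hits = simulateDispatch(2, 300); // 512 invocations, but only 300 particles
console.log(hits.length); // 300
```

Without the guard, the 212 surplus invocations in this scenario would read and write past the end of the particle array.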

Each entry in the buffer consists of a position and a color. The shader only updates the x coordinate of the position (wrapping around at the right edge) and computes a grey-scale color from the depth (z) value. This results in a flowing star field:

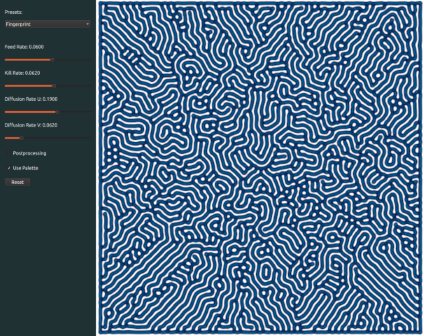
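Since the positions stay in GPU memory and cannot be queried from QML later, a CPU reference of the per-particle logic can help when reasoning about or unit-testing the shader. The sketch below reimplements one update step in JavaScript; stepParticle is a hypothetical helper, not code from the plugin, and it uses double precision where the shader uses 32-bit floats.

```javascript
// CPU reference for one invocation of the starfield compute shader.
// particle.pos and particle.color are 4-element arrays mirroring the vec4 members.
// JavaScript numbers are doubles, so results match the GPU only up to float precision.
function stepParticle(particle, speed) {
  const pos = particle.pos.slice(); // copy, like `vec4 position = buf.particle[index].pos`
  pos[0] += speed;                  // position.x += ubuf.speed
  if (pos[0] > 1.0) {
    pos[0] -= 2.0;                  // wrap from the right edge back to the left edge
  }
  const z = pos[2];
  return { pos, color: [z, z, z, 1.0] }; // grey level derived from depth
}

const moved = stepParticle({ pos: [0.998, 0.1, 0.5, 0.0], color: [0, 0, 0, 1] }, 0.005);
console.log(moved.pos[0].toFixed(3)); // -0.997 (wrapped around)
console.log(moved.color);             // [ 0.5, 0.5, 0.5, 1 ]
```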

A compute shader designed to process two-dimensional data, such as images, could specify its local sizes, inputs and outputs like this, for example:

layout (local_size_x = 32, local_size_y = 32) in;
layout (binding = 1, rgba8) uniform image2D inputImage;
layout (binding = 2, rgba8) uniform image2D outputImage;

So, assuming a 320×320 image, you would dispatch 10 work groups in both the x and y dimensions (320 / 32 = 10) to cover it entirely with a local size of 32×32.
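When the image size is not a multiple of the local size, the group count has to be rounded up, and the shader should then skip invocations that fall outside the image (for example by comparing gl_GlobalInvocationID.xy against imageSize(inputImage)). The helpers below are a small hypothetical sketch of that arithmetic; the same rounding would apply to the particle example’s dispatchX if dataCount were not a multiple of 256.

```javascript
// Number of work groups needed to cover `size` elements with groups of `localSize`.
// Rounding up ensures a final, partially filled group is still dispatched.
function groupCount(size, localSize) {
  return Math.ceil(size / localSize);
}

// Work groups in x and y for a 2D image with the given local size.
function dispatchSize(width, height, localX, localY) {
  return { x: groupCount(width, localX), y: groupCount(height, localY) };
}

console.log(dispatchSize(320, 320, 32, 32)); // { x: 10, y: 10 }
console.log(dispatchSize(330, 200, 32, 32)); // { x: 11, y: 7 }
```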

Having covered the basics of writing a compute shader, we can now create the ComputeItem in QML:

ComputeItem {
    id: computeItem

    property real speed: 0.005
    property int dataCount: 100 * 256

    computeShader: ":/shaders/computeshader.comp.qsb"
    dispatchX: computeItem.dataCount / 256

    buffers: [
        StorageBuffer {
            id: storageBuffer
            Component.onCompleted: initBuffer()
        }
    ]

    Component.onCompleted: computeItem.compute()
}

The computeShader property points to the shader file in qsb format (more on this later). The dispatchX, dispatchY and dispatchZ properties specify the number of work groups in each direction: in this example, 100 groups are dispatched, each with a size of 256 as defined in the compute shader. The number of groups in the y and z directions (dispatchY and dispatchZ) is 1, the default value.

The speed and dataCount properties are automatically transferred to the uniform buffer. Currently, only basic types such as integers, floats and boolean values are supported. Note that the properties must be declared in the same order as the corresponding members of the uniform buffer.
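Why does the order matter? The sketch below models the layout side of this rule; it is an illustration, not the plugin’s actual implementation. Under std140, each of the supported scalar types (float, int, uint, bool) occupies 4 bytes with 4-byte alignment, so writing the properties one after another puts each at offset 0, 4, 8 and so on, and a property declared out of order lands on the wrong member. The packUniforms helper and its `{ type, value }` input format are hypothetical, and little-endian byte order is assumed.

```javascript
// Hypothetical model: scalar uniforms packed in declaration order, std140-style.
// Each supported scalar is 4 bytes with 4-byte alignment; little-endian assumed.
function packUniforms(props) {
  const buffer = new ArrayBuffer(4 * props.length);
  const view = new DataView(buffer);
  props.forEach(({ type, value }, i) => {
    const offset = 4 * i; // declaration order determines the byte offset
    switch (type) {
      case "float": view.setFloat32(offset, value, true); break;
      case "int":   view.setInt32(offset, value, true); break;
      case "uint":  view.setUint32(offset, value, true); break;
      case "bool":  view.setUint32(offset, value ? 1 : 0, true); break; // std140 bool is 4 bytes
      default: throw new Error(`unsupported uniform type: ${type}`);
    }
  });
  return buffer;
}

// Mirrors the example: speed (float) first, then dataCount (uint in the shader).
const ubuf = packUniforms([
  { type: "float", value: 0.005 },
  { type: "uint", value: 100 * 256 },
]);
const check = new DataView(ubuf);
console.log(check.getUint32(4, true)); // 25600
```

Swapping the two entries would put the integer bits where the shader expects `speed`, which the shader would then misinterpret as a float.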

The buffers property expects a list of StorageBuffer and ImageBuffer items, with each item corresponding to a buffer (or image) bound in the compute shader. On the C++ side the buffers are represented as QByteArrays. It is possible to initialize them with a bit of JavaScript, employing an ArrayBuffer. Here is the initBuffer method for initializing the buffer data:

function initBuffer() {
    const dataSize = 8 * computeItem.dataCount; // 8 floats per point: x pos, y pos, depth, unused, rgba color
    const bufferSize = 4 * dataSize; // four bytes per float
    let buffer = new ArrayBuffer(bufferSize);
    let view = new Float32Array(buffer);
    let i = 0;
    while (i < dataSize) {
        view[i + 0] = Math.random() * 2.0 - 1.0; // x
        view[i + 1] = Math.random() * 2.0 - 1.0; // y
        view[i + 2] = Math.random(); // depth
        view[i + 3] = 0.0; // unused
        // we just add a black particle here, the real particle color is set in the compute shader
        view[i + 4] = 0.0; // r
        view[i + 5] = 0.0; // g
        view[i + 6] = 0.0; // b
        view[i + 7] = 1.0; // a
        i += 8;
    }
    storageBuffer.buffer = buffer;
}

The data is uploaded to and kept in GPU memory, so the updated positions cannot be queried later.

Displaying the results

After calling computeItem.compute(), the compute shader is executed in sync with the scene graph render loop: the positions are updated and the particle stars flow from left to right, from left to right, from left … What’s missing, of course, is the visualization of the result. The QtQuickComputeItem plugin comes with two items that can render the updated buffers and images to the screen: StorageBufferView renders storage buffers, and ImageBufferView is the corresponding item for images.

StorageBufferView {
    id: view
    focus: true
    anchors.fill: parent
    computeItem: computeItem
    resultBuffer: storageBuffer
    numberOfPoints: computeItem.dataCount
    pointSize: 2.0
}

The StorageBufferView needs to know the storage buffer it should render and the ComputeItem this storage buffer is associated with. The numberOfPoints property limits the number of points that are rendered; pointSize controls the size of the particles on screen. The renderer expects a fixed layout of 32 bytes per point: the first two values are the x and y coordinates, each a 4-byte float; the next eight bytes can be used for custom data; the last 16 bytes contain the color as four 4-byte float values in rgba order.
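The per-point layout can be expressed as byte offsets, which is handy when filling buffers for StorageBufferView from other sources than the initBuffer function above (whose Float32Array indices i + 0 to i + 7 map to the same offsets). The writeParticle and readParticle helpers below are a hypothetical sketch based on the layout described in the previous paragraph, assuming 32-bit little-endian floats; they are not part of the plugin.

```javascript
// Byte layout of one point as expected by StorageBufferView (32-bit floats assumed):
//   offset  0: x               (4 bytes)
//   offset  4: y               (4 bytes)
//   offset  8: custom data     (8 bytes; depth + unused in the starfield example)
//   offset 16: r, g, b, a      (4 x 4 bytes)
const PARTICLE_STRIDE = 32; // bytes per point, i.e. 8 floats

function writeParticle(view, index, { x, y, custom = [0, 0], rgba = [0, 0, 0, 1] }) {
  const base = index * PARTICLE_STRIDE;
  view.setFloat32(base + 0, x, true);
  view.setFloat32(base + 4, y, true);
  custom.forEach((c, i) => view.setFloat32(base + 8 + 4 * i, c, true));
  rgba.forEach((c, i) => view.setFloat32(base + 16 + 4 * i, c, true));
}

function readParticle(view, index) {
  const base = index * PARTICLE_STRIDE;
  const f = (offset) => view.getFloat32(base + offset, true);
  return { x: f(0), y: f(4), custom: [f(8), f(12)], rgba: [f(16), f(20), f(24), f(28)] };
}

const buffer = new ArrayBuffer(2 * PARTICLE_STRIDE);
const view = new DataView(buffer);
writeParticle(view, 1, { x: 0.5, y: -0.25, custom: [0.75, 0], rgba: [1, 1, 1, 1] });
console.log(readParticle(view, 1).x); // 0.5
```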

Baking the shader

Due to Qt’s cross-platform nature, shader code needs to run on platforms that use different graphics APIs (Direct3D on Windows, Metal on Apple platforms, etc.). Qt’s answer to this heterogeneous world of shader languages is the Qt Shader Baker (qsb – https://doc.qt.io/qt-6/qtshadertools-qsb.html). Shader code is written in modern GLSL. Qsb compiles it to SPIR-V, which is then translated into shaders for the different graphics backends (such as HLSL for Direct3D or MSL for Metal). Finally, the translated shaders are baked into one single qsb file that can easily be loaded from QML (see the example above).

The baking can be automated with CMake:

qt6_add_shaders(${PROJECT_NAME} "qmlbasicstorage_shaders"
    GLSL "430"
    HLSL 50
    MSL 12
    BATCHABLE
    PRECOMPILE
    OPTIMIZED
    PREFIX
        "/"
    FILES
        "shaders/computeshader.comp"
)

It’s important to set the GLSL target version to at least 430, since compute shaders require at least OpenGL 4.3.

Future directions

Compute shaders become especially interesting when processing huge blocks of data whose results are rendered afterwards. Examples more complex than the one presented in this blog post include physics simulations and the preprocessing of large datasets that are rendered later on.

In the future, it would be interesting to make the ComputeItem part of a larger processing pipeline. This would introduce items responsible for data generation to initialize storage buffers, as well as various visualization nodes to render results. Integration with the Qt Quick ShaderEffect item would also be intriguing, allowing storage buffers to be used directly as inputs for vertex shaders.

Conclusion

Integrating compute shaders into Qt Quick applications may still feel somewhat low-level, as compute shaders operate on abstract data that can only be interpreted in context. However, leveraging the power of Qt Quick, we’ve shown how easy it is to prototype compute pipelines. With QML, updating uniform variables through custom properties and defining storage and image buffers for shaders is seamless. We provide two rendering options, but custom visualizers can also be implemented straightforwardly if the buffers require a more complex interpretation than simple particle points.